Biography

I am excited to use my industry and academic experience to bring cutting-edge Computer Vision research to life. My interests lie in Computer Vision, particularly in tackling intricate challenges related to human faces and bodies, including 3D facial reconstruction from monocular images, facial avatar generation, and dataset creation.

- Artificial Intelligence

- 3D Face Reconstruction

- Computer Graphics

PhD in Artificial Intelligence, 2021

Imperial College London

M.Eng. in Electrical & Computer Engineering, 2019

National Technical University of Athens

Publications

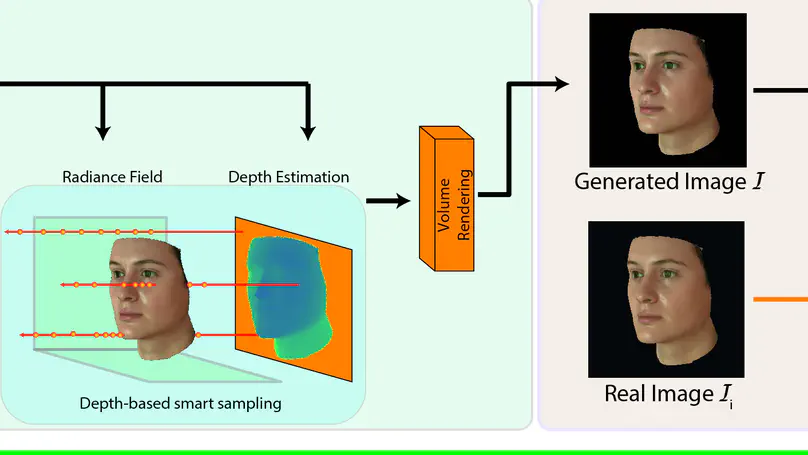

Facial 3D Morphable Models are a central topic in computer vision, with countless applications, and have been highly optimized over the last two decades. The tremendous improvements in deep generative networks have opened up various possibilities for improving such models and have attracted wide interest. Moreover, recent advances in neural radiance fields are revolutionizing novel-view synthesis of known scenes. In this work, we present a facial 3D Morphable Model that exploits both of these advances, can accurately model a subject’s identity, pose and expression, and can render it under arbitrary illumination. This is achieved by using a powerful deep style-based generator to overcome two main weaknesses of neural radiance fields: their rigidity and their rendering speed. We introduce a style-based generative network that synthesizes, in a single pass, all and only the rendering samples that a neural radiance field requires. We create a vast labelled synthetic dataset of facial renders and train the network on these data, so that it can accurately model and generalize across facial identity, pose and appearance. Finally, we show that this model can be accurately fitted to “in-the-wild” facial images of arbitrary pose and illumination, extract the facial characteristics, and be used to re-render the face under controllable conditions.
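The abstract does not describe the renderer itself. For readers unfamiliar with neural radiance fields, the sketch below shows the standard volume-rendering compositing step that turns per-sample densities and colors along a camera ray into a single pixel color. It is a generic illustration of that quadrature, assuming samples are already ordered front to back. It is not the paper's generator or rendering code, and the function name `composite_ray` is hypothetical.

```python
import numpy as np


def composite_ray(sigmas: np.ndarray, colors: np.ndarray, deltas: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Alpha-composite N samples along one ray (standard NeRF volume-rendering quadrature).

    sigmas: (N,) non-negative volume densities, ordered front to back
    colors: (N, 3) RGB values of each sample
    deltas: (N,) distances between consecutive samples
    Returns the composited RGB color (3,) and the per-sample weights (N,).
    """
    # Opacity contributed by each sample interval: alpha_i = 1 - exp(-sigma_i * delta_i).
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Transmittance T_i = exp(-sum_{j<i} sigma_j * delta_j), i.e. an exclusive cumulative sum.
    accumulated = np.concatenate(([0.0], np.cumsum(sigmas * deltas)[:-1]))
    transmittance = np.exp(-accumulated)
    # Each sample's contribution is its opacity scaled by how much light still reaches it.
    weights = transmittance * alphas
    rgb = (weights[:, None] * colors).sum(axis=0)
    return rgb, weights


# Usage: an empty sample, a nearly opaque green sample, then a sample hidden behind it.
rgb, weights = composite_ray(np.array([0.0, 1e6, 1.0]), np.eye(3), np.ones(3))
```

Because the weights depend only on the samples along a ray, a network that emits just the samples a ray actually needs can be rendered cheaply, which is the kind of saving the abstract refers to when it mentions rendering speed.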